Training Foundations: A Practical Path for a Growing Carbon Market

Nature‑based restoration in the UK is still young. As a result, there is not a vast amount of ground‑truth local project data. For context, as of January 2025, there has only been one peatland project that has reached year‑5 verification under the Peatland Code (PC). For the Woodland Carbon Code (WCC) this number stands at around 182 projects; however, even this data is often gated because of privacy concerns.

Moreover, where data is collected, it comes in many shapes and forms. Some projects are shifting to high‑resolution drone outputs and investing in LiDAR or photogrammetry‑based digital elevation models, while others are still processing 12.5 cm RGB tiles from aerial flights.

This reality shapes everything about how we build and ship geospatial AI at New Gradient and Calterra.

What we need

First, we need a model that can learn a general understanding of the underlying data distribution from a small number of samples. In other words, learning on tree‑count labels from restored Juniper in England should be sufficient for the model to transfer to Caledonian Pine in Scotland. Second, the AI models must retain performance across diverse source data.

Our answer: Geospatial Foundation Modelling.

We use a mix of open‑source and in‑house trained foundation models (FMs) that power task‑specific learners. This lets us quickly bootstrap to many downstream applications and generalise performance even with small datasets. The backbone is also a multisource, multiresolution learner, allowing us to handle the various inputs currently used in practice.

Multiple studies show that models that first learn from large pools of unlabeled imagery and only then see a small set of audited examples reach the same, or better, accuracy with far fewer labels than training from scratch. For example, one widely cited study showed that with ~1% of labels, a pretrained model could match or surpass a baseline trained with 100% of labels on a standard land‑cover benchmark. For compliance‑grade geospatial work, that label‑efficiency is decisive.

Our Training Process

1) Data and normalisation

- Sources: UK‑wide RGB and depth (DSM/DTM) imagery from drone, aerial, and satellite.

- Geometry: unify CRS, verify alignment, correct residual ortho issues.

- Depth cleanup: DSM detrending to emphasise local relief; distribution transforms for outlier handling and alignment with RGB data.

- Tiling & indexing: window scenes into training tiles; retain metadata.

- Augmentations: flips/rotations - careful to preserve ecology‑relevant cues.

2) Self‑supervised pretraining

To create foundation models we need to adopt a learning task that allows models to see vast amounts of domain data and understand it without requiring any extensive labelling efforts. This kind of learning strategy is called self-supervised learning - ie, we are not supervising the model with labels. The task we use is masked image modelling: hide patches, ask the network to reconstruct them. With RGB + height, the model learns structure (edges, textures, boundaries) and form (micro‑topography) without any labels. The model in a way gains an overarching understanding of Earth Observation (EO) data semantics.

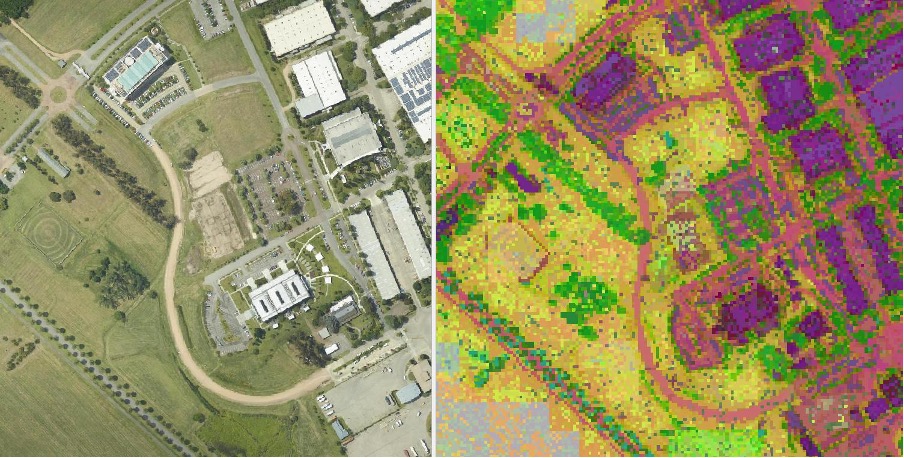

The figure below from an open-source project shows how these self-supervised learning tasks can help create this human-like perception in models. We can see that feature clustering on frozen embeddings of pretrained models already shows semantic grouping (trees, roads, fields, built areas) even before fine‑tuning (supervision). The model is itself able to learn underlying semantics of geospatial data, which makes it a much better and quicker learner on downstream geospatial analysis tasks - say, detecting all trees in an image. Hence, it has a learnt “foundation” in geospatial data.

3) Scale & infrastructureCompute

- 10× NVIDIA A40 GPUs, mixed precision, gradient checkpointing.

- Data scale: ~1.8 million tiles across the UK.

From Foundation to “Expert” Models

The pretrained encoders are the backbone. On top, we attach focused heads (“experts”) with clear, bite‑sized jobs. This reduces scope and fallibility.

What Foundation Modelling + Experts Buy Us?

Rapid adaptation: new domain, new indicators, new regulatory shifts, or new geographies; we adapt robust foundational learners instead of rebuilding from the ground up.

SOTA even in low‑label regimes: high‑quality, audited annotations are enough to reach target accuracy.

Lower failure modes via task segmentation: we deploy many expert models that focus on small tasks to mitigate errors and increase performance.

Interpretability: some experts predict intermediate measures (canopy height, species detection, bare‑peat extent) to ensure visibility into factors influencing carbon numbers and decision‑making around project quality.

Direct indicators: certain experts also predict direct measures-for example, imagery‑to‑biomass estimates or wetness indices. We believe models can sometimes create better intermediate abstractions than humans, and these measures, although not yet compliance‑ready, should flow alongside more stringent measurement protocols. Deep learning models have already shown an RMSE under 30 on biomass estimation, laying a strong foundation for more high‑frequency dMRV that meets upstream reporting timelines.

Recap

UK restoration needs models that are accurate and resilient. Foundation models give us that stability. We adapt them into small expert models for specific compliance‑grade tasks to reduce fallibility and improve continuously with new data, without starting from scratch.